This article originally appeared in The Bar Examiner print edition, December 2016 (Vol. 85, No. 4), pp. 16–28.

By James A. Wollack, Ph.D., and Mark A. Albanese, Ph.D.

We know from experience that some examinees attempt to cheat on the bar examination. Growing awareness of potential cheating and increasing sophistication in detecting cheating have contributed to an increase in the number of requests submitted by test administrators for statistical analysis of examinees’ answer sheets. Cheating is not a victimless crime. It gives an unfair advantage to the examinees who cheat and undermines the legitimacy of all examinees’ scores. Most importantly, cheating may unleash incompetent, unethical, and potentially destructive professionals on the unsuspecting public. This article is intended to educate the bar admissions community on the process NCBE employs to detect cheating when it receives a request for cheating analysis and to propose steps that test administrators can take to prevent cheating in the first place.

Before discussing how NCBE conducts cheating analyses, it is important to emphasize that the best method for handling cheating is prevention. Prevention includes minimizing the opportunities for examinees to cheat, having vigilant proctors, and instituting severe penalties for cheating. Examinees are discouraged from cheating if they are aware that proctors are actively monitoring them for signs of cheating and that the penalties for cheating are severe.

Best Practices for Registration and Exam Day

Cheating prevention begins at registration. Clear information about prohibited items and behaviors should be provided to candidates in advance of the exam. Candidates should be checked to ensure that they satisfy all eligibility criteria, so as to avoid exposing test content unnecessarily. Also, candidates with a history of having been flagged for potential cheating during a previous test administration should be carefully monitored during the exam, regardless of whether those examinees were ultimately charged with wrongdoing.

Prevention activities are even more important on exam day. Each examinee must present authentic, current, government-issued ID bearing both a photograph and a signature. The examinee’s name should exactly match the information on the registration list, and the photo should match the likeness of the examinee appearing to test. Examinees should be asked to sign a testing agreement describing the security conditions and potential consequences for violating the conditions, and they should be instructed on what to do with personal belongings to ensure that they will be inaccessible during testing.

Prior to entering the testing room, examinees should be reminded that during the exam, they are not allowed to possess cell phones or wear hats, sunglasses, or baggy clothing. Head scarves, yarmulkes, and other items of religious significance may be worn during testing, provided that they are removed and inspected during check-in (often in a private room and by testing personnel of the same sex as the examinee). Examinees should be asked to turn their pockets inside out to verify that they are not concealing prohibited materials. Test administrators may also ask to inspect articles of clothing, such as by asking examinees to push up shirtsleeves or remove jackets or sweatshirts for inspection.

Examinees should never be allowed to select their own seats, but should be seated according to a prearranged seating chart, with adequate space between all examinees to make copying answers from a neighboring examinee difficult, while also providing ample room for proctors to move about the testing room and have unobstructed sight lines.

Test booklets provided by NCBE are sequenced to ensure that examinees do not receive the same test form as their neighbors. (Multiple forms, and the common approaches to developing them, are discussed later in the article.) The seating plan for each testing room should consider how test forms will be distributed so that nearest neighbors and those within line of sight do not have the same form. For example, if the seating plan is such that examinees are seated in a square with an aisle between them and those in front, the test forms should be distributed, after all examinees are seated, by alternating from one side of the aisle to the next, handing each examinee the preassigned booklet from the top of the pile. This should be mapped out in advance with the directions for distribution used to assign booklets to each examinee in the seating plan. By reviewing the seating plan with test forms identified, the testing site supervisor should be able to clearly see whether examinees will have other examinees with the same form in their visual field, enabling them to make any necessary adjustments to the distribution plan. It is also important that proctors understand how to replace a booklet if they observe a damaged test booklet when they are handing them out.
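The review of a seating plan against form assignments can be sketched in a few lines of Python. This is only an illustration, not an NCBE tool: the grid layout, the form labels, and the function name `same_form_neighbors` are hypothetical, and "line of sight" is simplified to the eight seats immediately surrounding an examinee.

```python
def same_form_neighbors(seating):
    """Return pairs of adjacent seats (including diagonals) that share a form.

    `seating` is a list of rows; each entry is a form label such as "A",
    or None for an empty seat. This layout is an assumption for illustration.
    """
    conflicts = []
    rows = len(seating)
    for r, row in enumerate(seating):
        for c, form in enumerate(row):
            if form is None:
                continue
            # Check right, lower-left, below, and lower-right so that
            # every adjacent pair is examined exactly once.
            for dr, dc in ((0, 1), (1, -1), (1, 0), (1, 1)):
                rr, cc = r + dr, c + dc
                if 0 <= rr < rows and 0 <= cc < len(seating[rr]):
                    if seating[rr][cc] == form:
                        conflicts.append(((r, c), (rr, cc)))
    return conflicts


if __name__ == "__main__":
    plan = [["A", "B"],
            ["B", "A"]]
    # Each reported pair is two seats within view that hold the same form.
    print(same_form_neighbors(plan))
```

An empty result suggests that no examinee has an immediate neighbor with the same form; any reported pair points to a spot where the distribution plan may need adjusting.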

Finally, examinees who go on break during the exam, such as to use the bathroom, should be required to bring their exam materials to a proctor so that they are not left unsupervised during the break. Because breaks during the exam could provide an opportunity for examinees to discuss test content, test administrators should excuse only one examinee at a time to use the bathroom.

Effective Proctoring

Screening examinees and undertaking other cheating prevention measures, however, can only go so far. It is the ability of proctors to detect unusual examinee behavior that is both the best deterrent to cheating and the best method of detection. Examinees who feel that they are being carefully watched are less likely to risk being caught looking at another examinee’s test paper. Proctor reports of irregular behavior and the answer sheet comparison requests that they trigger are critical first steps in cheating detection.

The proctor’s task has become more challenging as cheating technology has become smaller, cheaper, of higher quality, and smarter. Technology is being integrated more frequently into common items, such as earrings equipped with Bluetooth listening devices; glasses, pens, and shirt buttons equipped with high-definition video cameras; and wearable smart technology, such as rings that can receive text messages or sleek designer watches that can access the Internet. As a result, the use of technology during an exam, either to capture content for later circulation or to communicate with others about test content during the exam, is emerging as a major threat to test security.

What Proctors Should Look For

Vigilant proctoring should be conducted using testing personnel who have been specifically trained on recognizing cheating behaviors, on the use and detection of cheating technologies, and on appropriate intervention strategies when examinees are suspected of cheating. During the test, cheaters frequently behave differently from examinees who are not cheating, and proctors can often identify cheaters by looking for those engaged in unusual behavior. Successfully identifying unusual behavior begins with understanding typical behavior. In a paper-based exam environment, most examinees focus on their exam materials and sit quite still throughout the entire exam, hunched over their materials, which lie flat on their workspace. In a computer-based testing environment, as is used by many jurisdictions for the written portion of the bar exam, candidates are similarly still and focused, with their attention oriented toward their computer screens. Deviations from this expected behavior should be monitored closely. Examples of unusual test-taking behaviors that may indicate cheating include

- looking off into the distance;

- repeatedly looking at another examinee;

- looking around the room;

- looking at proctors;

- looking at or fidgeting with one’s clothing or body;

- positioning one’s body awkwardly, especially to turn away from a proctor;

- covering or hiding one’s eyes;

- lifting exam materials off the workspace, especially to hold them parallel to one’s body;

- flipping pages back and forth in the exam booklet;

- using one’s hands or body as a signal (i.e., attempting to communicate nonverbally with another examinee);

- stretching or other gross-motor movements; and

- repeated excuses for leaving one’s seat, including frequent requests to use the bathroom.

Making the Best Use of Proctors

It is very important to have enough proctors available to adequately monitor the testing room(s) and restrooms. Having proctors walk through the aisles is a good way to establish a clear presence in the room, which may serve to deter cheating. However, it is also very predictable, so it is easy for the examinees to anticipate when proctors will be monitoring and adjust their behavior accordingly. Often, proctoring from the back of the room provides the best opportunity to observe unusual behavior because examinees are less aware that they are being watched. A combination of walking through the aisles and viewing from the back of the room may be the best way to both deter and detect cheating. Also, when a proctor observes behavior that is suspicious, it is helpful to alert another proctor so that the behavior can be monitored by someone else who can either corroborate or refute the observation. As important as proctoring is, proctors are also fallible. The goal should always be to have an accurate picture of the testing environment, and having independent observations from multiple sources goes a long way toward understanding whether the particular behavior was truly anomalous and how likely it is that the examinee in question was involved in some form of cheating.

If the proctors agree that the observed behavior is prohibited, the decision about whether or not to intervene hinges on the nature of the behavior. The policies and procedures determined by the jurisdiction’s board of bar examiners should be followed in all cases. The rule of thumb is that behavior that risks the immediate security of the test content itself (e.g., taking pictures of items, communicating item content to others external to the exam room, stealing test booklets or pages from test booklets, etc.) must be stopped immediately, and the prohibited technologies and stolen materials should be searched and/or confiscated. In the case of the use of technology, if the examinee is not willing to show the recent pictures, recordings, or web history, it may be necessary to contact the police.

If the immediate security of the test content is not at stake, as would be the case if an examinee is suspected of copying from or colluding with other examinees, best practice is to allow the behavior to continue. This will allow proctors to continue to monitor the examinee, thereby strengthening the observational evidence of misconduct. In addition, it will lead to greater answer similarity between the examinees in question. As will be discussed shortly, greater answer similarity between examinees will result in higher probabilities of detecting these examinees as statistically anomalous.

Reporting Proctor-Observed Suspected Cheating

If a proctor notes any suspected cheating behavior during the administration of the Multistate Bar Examination (MBE), test administrators are asked to note it on the MBE Irregularity Report (see Figure 1). For all cases in which an examinee is suspected of cheating, NCBE’s policy is to exclude the suspected cheater’s answers from the equating process. (The equating process, conducted by NCBE after each exam administration, statistically adjusts scores to account for differences in difficulty of questions from one administration to the next so that scores are comparable in meaning across administrations.) Excluding data that is potentially compromised is necessary even if the test administrator does not think that the cheating can be proved. If the examinee did not cheat, removing the valid answers of that one examinee among so many will have little impact on the results of the equating process. However, if the examinee did cheat and the invalid answers are included in the equating and subsequent scoring analyses, it can lead to a series of scoring problems that will affect scores for all examinees. Because of this, NCBE follows best practices and takes a conservative approach by removing all answer sheets identified as suspicious from the equating process.

Figure 1: MBE Irregularity Report

Using Multiple Exam Forms

Even with the most vigilant proctoring and the most stringent screening methods, it is not always possible to eliminate all cheating. Fortunately, even if no proctor observes an examinee cheating, it may still be possible to detect that cheating occurred. One approach employed to deter and potentially identify cheating is to utilize multiple forms of the examination. In testing, different forms contain items that differ from one another in substance and/or order of appearance. This is most commonly achieved in one of three ways. Test forms can be built with different test questions designed to measure the same knowledge with equal precision, and statistically equated to compensate for any small differences in difficulty that might exist between the forms so that the reported scores are equivalent for all forms. Another approach to developing different forms is to limit the differences in test content to the unscored questions being tried out for future use, with all scored items remaining identical across forms. In the third approach, the scored items remain identical across multiple forms but are presented to examinees in different sequences. These three approaches are not mutually exclusive, so testing programs can use any or all of these strategies for assembling different forms, such as combining unique tryout questions with scored items presented in scrambled order.

How Multiple Forms Deter Cheating and Assist in Cheating Detection

Multiple forms serve two important security functions. First, they are an important prevention strategy. When multiple forms are used, many individuals who attempt to copy answers will discover that they have a different form from that of their neighbor and will realize that copying is pointless. For those who do not realize that the forms are different, utilizing multiple forms may not prevent the copying behavior itself, but it prevents examinees who cheat from fraudulently passing the exam.

In addition, multiple forms can be used to detect cheating, even in the absence of proctor observation. When multiple forms are used, as a quality control measure, every answer sheet is scored using all of the possible answer keys (containing the correct answer option for each question on a test) just to make certain that the examinee did not mismark the answer sheet and indicate the incorrect test form. If an examinee receives a higher score using the answer key for a form that is different from the one indicated on his or her answer sheet, the answer sheet is flagged, and it is checked to be certain that the form designated on the answer sheet matches the one on the examinee’s test booklet. If the answer sheet does match the designated form, then we know that the test was scored correctly and the question arises as to why the examinee received a higher score for a different form of the exam than the one he or she was administered.

In some cases, it may be that the scores under all answer keys are close to what one would expect to result from random guessing, and the reason for the higher score with the wrong key might be a random occurrence. However, when the examinee’s score using the correct key is very low but his or her score using one of the alternate keys is substantially higher, possibly even approaching or exceeding the passing score, it is usually a strong indication that the examinee copied answers from a neighbor.

Following an answer key analysis, it is NCBE’s practice to notify the jurisdiction of any anomalies that are consistent with what we would expect of an examinee who was copying answers from someone taking an alternate form of the exam.
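The answer key check described in this section can be illustrated with a minimal Python sketch. It assumes that answers and keys are stored as strings of option letters aligned by question number; the function names and data layout are hypothetical and do not represent NCBE's scoring software.

```python
def score(answers, key):
    """Number of questions on which the marked answer equals the key."""
    return sum(a == k for a, k in zip(answers, key))


def check_answer_keys(answers, marked_form, keys):
    """Score one answer sheet under every form's key.

    Returns the score under each key and, if some other form's key gives
    a strictly higher score than the marked form's key, that form's label
    (otherwise None). `keys` maps form label -> answer key string.
    """
    scores = {form: score(answers, key) for form, key in keys.items()}
    best_form = max(scores, key=scores.get)
    flagged = scores[best_form] > scores[marked_form]
    return scores, (best_form if flagged else None)


if __name__ == "__main__":
    keys = {"A": "ABCDA", "B": "BCDAB"}  # made-up keys for two forms
    scores, alternate = check_answer_keys("BCDAA", "A", keys)
    print(scores, alternate)
```

A flag from a check like this is only a starting point. As the text notes, the next step is to confirm that the form marked on the answer sheet matches the test booklet, and to consider whether all scores are near chance level.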

Conducting a Cheating Investigation: The Answer Sheet Comparison Report

When credible evidence exists that an examinee may have cheated on the bar exam, and before taking any action against the examinee, it is important to conduct a thorough investigation. There are multiple pieces of information that could potentially trigger an investigation; however, for the bar exam, visual evidence detailed in a proctor report and scoring significantly higher with an alternate form answer key are overwhelmingly the two most common triggers.

The first step in an investigation is usually a request that NCBE conduct a statistical analysis of the suspected examinee’s answer pattern and produce an Answer Sheet Comparison Report (ASCR). Examinees who cheat leave a stamp on the data, which can be uncovered through careful statistical analysis. For example, when an examinee copies many answers from a neighboring examinee, it is expected that the two examinees will have answer patterns that are unusually similar to each other. One way to evaluate the extent of similarity between two examinees is to compare their similarity with the similarity between pairs of examinees for whom copying was not possible.

One straightforward and informative way to do this is to first pair each examinee who took the same form of the test as the suspected copying examinee with another examinee testing in a different jurisdiction who was administered the same test form as the suspected source examinee, and to compute some measure of similarity between them—for example, the number of answer matches. Given typical MBE testing volumes, this approach results in between 2,000 and 6,000 distinct pairs of non-copying examinees. This group can then be used as a baseline or benchmark against which the targeted pair may be compared. Targeted pairs with similarity measures that are very high relative to the baseline are flagged for further review.
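The baseline comparison can be sketched as follows. This is a simplified illustration under stated assumptions: the `Examinee` record, the greedy pairing rule, and the function names are hypothetical, and the article does not specify how NCBE actually forms its pairs beyond requiring different jurisdictions and the appropriate test forms.

```python
from typing import NamedTuple


class Examinee(NamedTuple):
    jurisdiction: str
    answers: str  # one option letter per question, aligned by question number


def count_matches(a, b):
    """Number of questions on which two answer strings agree."""
    return sum(x == y for x, y in zip(a, b))


def baseline_matches(copier_form_group, source_form_group):
    """Pair each examinee on the copier's form with an unused examinee on
    the source's form from a different jurisdiction, and return the match
    count for each pair. Greedy pairing is an assumption of this sketch."""
    unused = list(source_form_group)
    values = []
    for ex in copier_form_group:
        for i, cand in enumerate(unused):
            if cand.jurisdiction != ex.jurisdiction:
                values.append(count_matches(ex.answers, cand.answers))
                del unused[i]
                break
    return values


def tail_fraction(observed, baseline):
    """Share of baseline pairs with a value at least as large as observed."""
    return sum(v >= observed for v in baseline) / len(baseline)


if __name__ == "__main__":
    group_a = [Examinee("WI", "ABCD"), Examinee("IA", "AACC")]
    group_b = [Examinee("WI", "ABCC"), Examinee("MN", "DBCA")]
    baseline = baseline_matches(group_a, group_b)
    print(baseline, tail_fraction(3, baseline))
```

With real testing volumes the baseline would contain thousands of pairs rather than two, and a small tail fraction for the targeted pair would mark it for further review.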

How the ASCR Is Used

The ASCR provides results for four different similarity measures, reflecting different aspects of similarity between the answers provided by the suspected copier and the suspected source. (These four measures are discussed later in the article.) Each index can be sensitive to a different type of cheating behavior, so it is not necessary for all indexes to be unusual in order for a jurisdiction to take the next step in the process. Unusual similarity for each of the four indexes is described at four extremity levels.

The first extremity level is “No Unusual Similarity.” This means that in randomly combined pairs of examinees taking the same forms as the suspected copier and suspected source, more than 5% of the pairs have values of the index as large as or larger than the one observed. If this level of similarity is found for all four indexes, there is insufficient statistical evidence of misconduct to pursue any further action, unless there is incontrovertible physical evidence of cheating (e.g., use of a communication device, recovery of a document with stolen test items, etc.).

The second level is labeled “Somewhat Unusual Similarity” and means that at most 5% of the pairs have an index value at or beyond the level observed for the suspicious pair. This evidence level is usually the lowest level for which NCBE would recommend moving forward with an investigation, and then only if at least two of the indexes achieve this level of significance and there is reliable and compelling observational evidence of misconduct.

The third level is “Moderately Unusual Similarity” and means that at most 1% of the pairs have an index value at least as large as what was produced by the pair of examinees under investigation. NCBE would usually recommend moving forward with an investigation if any of the four indexes achieves this level of significance, provided that the proctor report raised serious concerns about potential misconduct by this pair of examinees.

The fourth and most compelling extremity level is labeled “Very Unusual Similarity” and means that at most 0.1% (1 out of every 1,000) of the pairs have values this large or larger. In any situation in which proctors observed suspicious activity by the pair of examinees in question, this level of significance is sufficient for NCBE to recommend moving forward with an investigation. However, jurisdictions may choose to further pursue any pairs flagged at the Very Unusual Similarity level, even in the absence of visual evidence by proctors, provided that there is some additional reason to be suspicious of this pair (e.g., one of the examinees produced an unusually high score using the answer key for the other examinee’s test form, etc.).
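The four extremity levels amount to thresholds on the share of benchmark pairs whose index value is at least as large as the observed one. A minimal sketch of that mapping, with a hypothetical function name and the share expressed as a fraction between 0 and 1:

```python
def extremity_level(tail_fraction):
    """Map the share of benchmark pairs at or above the observed index
    value to the ASCR's qualitative label (thresholds from the text)."""
    if tail_fraction <= 0.001:   # at most 0.1% of pairs
        return "Very Unusual Similarity"
    if tail_fraction <= 0.01:    # at most 1% of pairs
        return "Moderately Unusual Similarity"
    if tail_fraction <= 0.05:    # at most 5% of pairs
        return "Somewhat Unusual Similarity"
    return "No Unusual Similarity"  # more than 5% of pairs


if __name__ == "__main__":
    for share in (0.20, 0.03, 0.008, 0.0005):
        print(share, extremity_level(share))
```

The label alone does not determine what happens next. As described above, the recommendation to move forward also depends on how many indexes reach a given level and on the strength of the observational evidence.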

Submitting an ASCR Request

NCBE prepares an ASCR exclusively on a request basis and only for specific examinees and their accompanying suspected source examinees. To request an ASCR, jurisdictions must complete an Answer Sheet Comparison Request Form (see Figure 2). On this form, the test administrator must provide the applicant numbers of both the suspected copier and the suspected source, as well as the test session(s) in which the suspected copying was observed and the question numbers involved. If the trigger for the ASCR request is that an examinee performed better on the wrong form than on the one he or she was administered, jurisdictions often submit ASCR requests with multiple suspected sources. The potential sources are those for which the examinee in question had the best line of sight. As a result, a seating chart must accompany the ASCR request, indicating the location of the suspected copier and any potential source examinees.

Figure 2: Sample Answer Sheet Comparison Request Form

Analyzing the ASCR

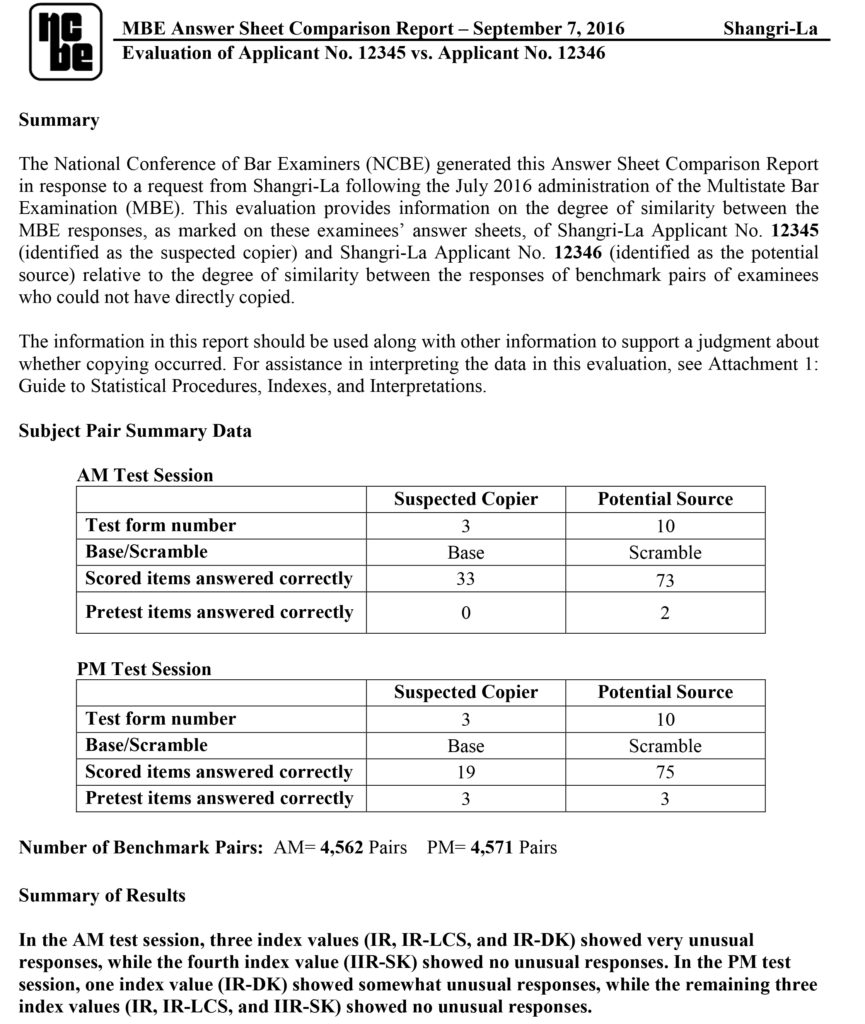

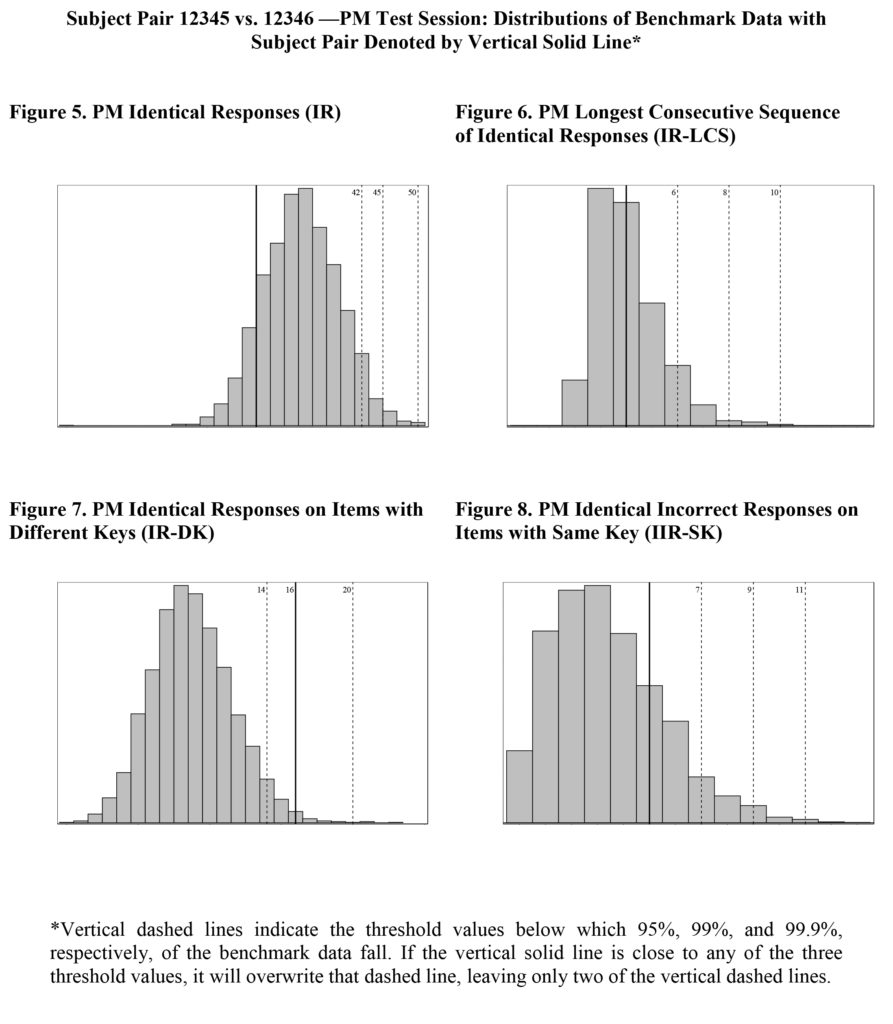

Figure 3 at the end of this article provides an example of an ASCR investigating possible answer copying for a specific pair of examinees: 12345 (the suspected copier) and 12346 (the suspected source). Using the logic described above for analyzing unusual similarity, the ASCR investigates four different indexes of response similarity for the AM and PM sessions separately: (1) the total number of identical responses, (2) the longest string of consecutive identical responses, (3) the total number of items for which the correct answer was different on the forms completed by the two examinees, but for which their answers were identical, and (4) the total number of items with the same correct answer, but for which the two examinees both selected the same incorrect answer. Results in the ASCR are provided in tabular form, showing the index value and its corresponding percentile rank (based on the benchmark data set) as well as a qualitative description of the extremity level. The ASCR also provides these same data graphically. For each index, the report shows the benchmark distribution, the location of the index value for the examinee pair under investigation, and the location of the thresholds between the four extremity levels.
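The four indexes can be computed for a single pair in one pass over the answer sheets. The sketch below is illustrative only: it assumes answers and keys are strings of option letters aligned by question position, does not treat omitted answers specially, and uses hypothetical names rather than anything taken from the ASCR itself.

```python
def similarity_indexes(copier, source, key_copier, key_source):
    """Compute four response-similarity counts for one pair of examinees.

    copier, source         -- answer strings, aligned by question position
    key_copier, key_source -- answer keys for the forms each examinee took
    """
    total_identical = 0   # (1) identical responses
    longest_run = 0       # (2) longest string of consecutive matches
    current_run = 0
    diff_key_matches = 0  # (3) identical answers where the keys differ
    same_incorrect = 0    # (4) same key, both chose the same wrong answer
    for a, b, ka, kb in zip(copier, source, key_copier, key_source):
        if a == b:
            total_identical += 1
            current_run += 1
            longest_run = max(longest_run, current_run)
            if ka != kb:
                diff_key_matches += 1
            elif a != ka:
                same_incorrect += 1
        else:
            current_run = 0
    return {
        "total_identical": total_identical,
        "longest_run": longest_run,
        "diff_key_matches": diff_key_matches,
        "same_incorrect": same_incorrect,
    }


if __name__ == "__main__":
    # Made-up six-question session for illustration.
    print(similarity_indexes("ABCDAB", "ABCDBB", "ABDDAC", "ABCDAC"))
```

Each of these counts would then be compared with its own benchmark distribution, computed separately for the AM and PM sessions, to obtain the percentile rank and extremity level shown in the report.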

Consulting an Outside Expert for Further Analysis

When a jurisdiction decides to initiate an investigation into possible cheating, NCBE recommends that an outside expert on test security be consulted. An independent expert on test security can be especially important if there is a desire on the part of the jurisdiction to take action against an examinee for cheating (e.g., cancel the test score, deny admission to the bar on character and fitness grounds, etc.). Such a consultant should be able to conduct analyses that are more sophisticated than are the screening tests run by NCBE, such as by considering the suspected copier’s overall test performance and the specific answers provided by the suspected source examinee(s). The consultant should also be experienced in giving testimony in court, because of the distinct possibility that any action taken by the jurisdiction will result in a legal challenge from the examinee. An independent expert is especially critical if action is being taken on the basis of statistical results alone and there is no proctor report of copying behavior having been observed.

Conclusion

Investigating possible cheating is an important and complicated endeavor, which must be done very carefully. Cheating on tests, including the MBE, is a very real problem, but several good strategies exist to deter and prevent it from happening, and to detect it in the event that prevention strategies are ineffective. Most importantly, if a test administrator has any reason to suspect that copying or answer sharing occurred during an MBE administration, it is very important that he or she complete an Answer Sheet Comparison Request Form so that an investigation into possible misconduct can be further informed by the data.

James A. Wollack, Ph.D., is a professor in Quantitative Methods in the Department of Educational Psychology at the University of Wisconsin–Madison; he also serves as the director of the UW–Madison Testing and Evaluation Services and the UW System Center for Placement Testing.

Mark A. Albanese, Ph.D., is the Director of Testing and Research for the National Conference of Bar Examiners.

Figure 3: Sample Answer Sheet Comparison Report (ASCR)
