This article originally appeared in The Bar Examiner print edition, June 2016 (Vol. 85, No. 2), pp. 4–9.

By Erica Moeser

Thanks to so many bar examiners, bar administrators, and state Supreme Court justices for attending NCBE’s 2016 Annual Bar Admissions Conference in Washington, D.C., this April. The program garnered favorable reviews, and we are basking briefly in the afterglow of those positive reactions before turning to development of the 2017 edition, which will be held May 4–7 in San Diego. We devote tremendous institutional resources to presenting a meaningful educational offering every year and believe that the investment of time and treasure is consistent with our mission as a nonprofit.

While we were assembling for the conference in Washington, we received word on April 14th that the ink was drying on a decision of the New Jersey Supreme Court to adopt the Uniform Bar Examination effective with the February 2017 administration. It was a great way to start the weekend. That brings the number of UBE jurisdictions to 22. Welcome, New Jersey!

The UBE is under active consideration in a number of states. For those jurisdictions that are already administering it, it has become business as usual with no ripples of change and—more importantly—no signs of discontent. For those jurisdictions that are dipping toes into the water to explore its appropriateness for them, there seem to be two areas that generate a level of concern or skepticism.

I encourage skeptics to go directly to UBE user jurisdictions to learn what issues were raised prior to adoption and what the outcomes have been. Those who have implemented the UBE can provide unfiltered and authentic information about their experiences.

The first issue that tends to pop up concerns whether and how to test on state law distinctions. A number of jurisdictions have taken the position that the UBE itself is sufficient as an entry-level test of basic competence and that no additional component testing knowledge of state law is therefore necessary. Others have chosen to address state-specific law and have developed various models for doing so, ranging from live courses to online instruction (with or without online testing). Missouri and Arizona led the way in this regard.

The latest entrants to the online approach are Alabama and New York. All of the jurisdictions that have devised state-specific components have been happy to share expertise with new adopters of the UBE and have been willing to provide information about the processes used to develop their materials. We have now moved far beyond merely predicting that a variety of models will work. They now exist in several forms, and they have been successful in meeting the objectives of the jurisdictions that have implemented them.

The second issue concerns the impact on passing scores when the UBE is adopted. This includes whether the UBE causes a drop in the percentage of passing examinees and whether minority examinees are adversely affected by the adoption of the UBE.

As to the first of those issues, there is nothing inherent within the UBE that causes a drop in scores leading to a declining percentage of passing examinees. Almost all jurisdictions scale their written components to the Multistate Bar Examination. It is that process, and not the fact that the written component is different, that governs whether the examinee achieves a passing score. The fact that the test is the UBE is inconsequential.
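Scaling written scores to the MBE is commonly done with a linear transformation: each raw written score is placed on the MBE scale so that, for the examinees in a given administration, the written scores take on the same mean and standard deviation as those examinees' MBE scores. The sketch below assumes that mean-and-standard-deviation method and uses a hypothetical helper name and made-up scores; it is an illustration, not any jurisdiction's actual procedure.

```python
from statistics import mean, pstdev


def scale_to_mbe(raw_written, mbe_scaled):
    """Place raw written scores on the MBE scale (hypothetical helper).

    Assumes the common linear mean/standard-deviation method; the means and
    standard deviations come from this jurisdiction's own examinees.
    """
    if len(raw_written) != len(mbe_scaled):
        raise ValueError("need one written score and one MBE score per examinee")
    w_mean, w_sd = mean(raw_written), pstdev(raw_written)
    m_mean, m_sd = mean(mbe_scaled), pstdev(mbe_scaled)
    if w_sd == 0:
        raise ValueError("written scores have no spread; cannot scale")
    # Express each raw score as a distance from the written mean in SD units,
    # then map that distance onto the MBE mean and SD for the same examinees.
    return [m_mean + m_sd * (x - w_mean) / w_sd for x in raw_written]
```

For example, `scale_to_mbe([10, 20, 30], [130, 140, 150])` returns values equal, up to floating-point rounding, to `[130, 140, 150]`. Because the scaled written scores inherit the MBE mean and spread of the jurisdiction's own examinees, the pass/fail outcome is governed by this process rather than by which written tests happen to be scaled.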

As has been written in this magazine and elsewhere repeatedly, the MBE is an equated exam. The result of the equating process is that a score reported from a prior MBE administration has the same meaning as one earned currently. Drops in examinee performance cannot be attributed to a change in the MBE—other factors are responsible.

It is now well established that the declining bar performance currently being observed in many jurisdictions has roots in the relatively recent decline in law school applications. The MBE is a trustworthy marker that is relevant to assessing the institutional impact of documented declines in the entering credentials of students at the bottom of many law school classes. There is a correlation between performance on the Law School Admission Test (LSAT) and performance on the MBE. (Having said that, it is fair to add that often there is a higher correlation between law school rank in class and performance on the bar examination.)

Almost every jurisdiction that has adopted the UBE has retained its existing cut score—that is, the point at which examinees pass or fail. Exceptions are Washington, which was not using the MBE or any NCBE testing product and thus converted from an all-essay format to the UBE format; and Montana, which coupled adoption of the UBE with an increase to its cut score. To the extent that a jurisdiction changes the weighting of its MBE and written components when it adopts the UBE, there will be a difference in who passes and fails—but not in the overall number of those who pass and fail.

As to the question of minority performance on the UBE, little information exists. The one significant piece of research of which I am aware was done by the NCBE research staff at the time New York undertook its exhaustive review of the UBE before recommending its adoption to the New York Court of Appeals. The work was possible because New York, unlike almost every other jurisdiction, collects demographic data. (It vexes me when critics make baseless accusations about both the UBE and the MBE, or otherwise sow seeds of doubt, when their own states do nothing to collect and assess demographic data for their own test takers.)

We at NCBE are limited in what we are able to study about the relationship between law school predictors and bar performance. A data-sharing agreement with the Law School Admission Council has lapsed, and therefore our earlier work in this area cannot be replicated with current data.

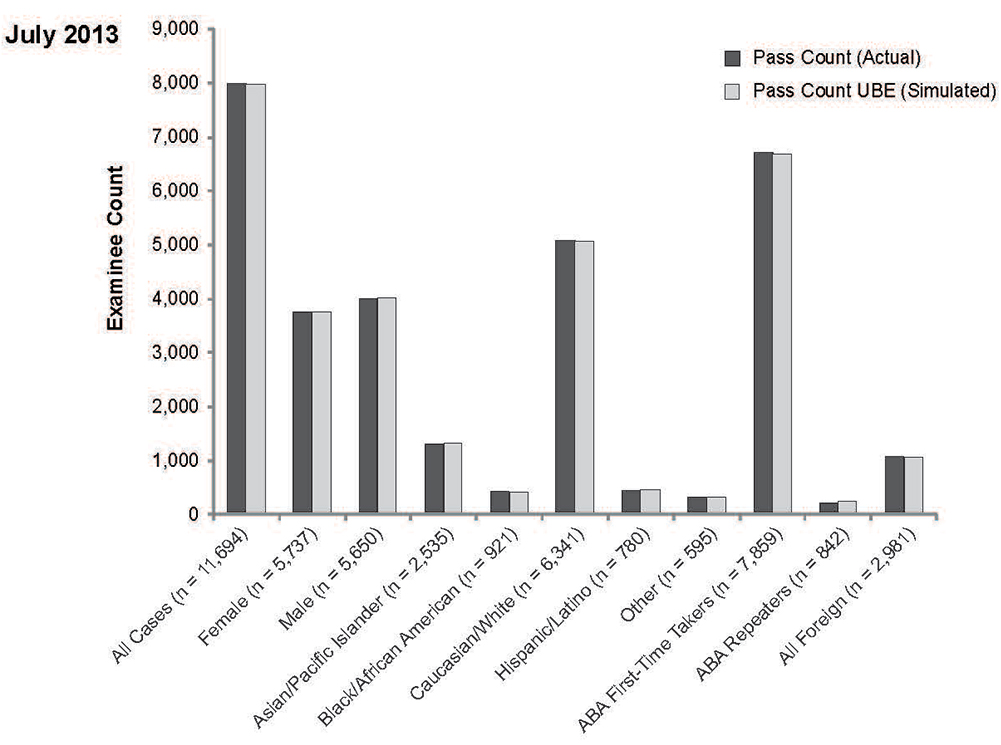

In the New York study, our research staff looked at the results of the February and July 2013 New York bar examinations and created what it believed to be the best simulation available to predict the outcomes were that same test population to take the UBE. This was done by weighting the actual MBE scores of the examinees at 50% (the UBE weighting), doubling the actual Multistate Performance Test score (New York used only one MPT) to a 20% UBE weight, and using the New York essay scores as a surrogate for the Multistate Essay Examination and assigning them the UBE weight of 30%. By using the same examinees and the same weightings for written tests that were comparable, NCBE was able to determine that the adoption of the UBE in New York, assuming the same cut score, would have little effect on the numbers who passed and failed across categories.
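The weighting used in the simulation can be expressed as a weighted composite compared against an unchanged cut score. The sketch below uses the 50/30/20 weights described above, assumes every component has already been placed on a common scaled-score metric, and lets the single New York MPT score carry the full 20% MPT weight in place of the UBE's two MPTs. The function names, record fields, cut score of 133, and examinee data are all hypothetical; this is not NCBE's actual analysis code.

```python
from collections import Counter

# Weights from the simulation described in the text. Assumption: all three
# component scores are already on a common scaled-score metric.
UBE_WEIGHTS = {"mbe": 0.50, "essay": 0.30, "mpt": 0.20}


def ube_simulated_score(mbe, essay, mpt, weights=UBE_WEIGHTS):
    """Weighted composite; the one MPT score stands in for the UBE's two MPTs."""
    return weights["mbe"] * mbe + weights["essay"] * essay + weights["mpt"] * mpt


def pass_counts_by_group(examinees, cut):
    """Count simulated passes per group at a fixed (unchanged) cut score.

    Each examinee is a dict with hypothetical keys "group", "mbe", "essay", "mpt".
    """
    counts = Counter()
    for e in examinees:
        if ube_simulated_score(e["mbe"], e["essay"], e["mpt"]) >= cut:
            counts[e["group"]] += 1
    return counts


# Made-up examinees for illustration only.
examinees = [
    {"group": "A", "mbe": 140, "essay": 135, "mpt": 130},  # composite about 136.5
    {"group": "A", "mbe": 125, "essay": 130, "mpt": 128},  # composite about 127.1
    {"group": "B", "mbe": 138, "essay": 128, "mpt": 131},  # composite about 133.6
]
print(pass_counts_by_group(examinees, cut=133))
```

Comparing these per-group counts with the actual pass counts for the same examinees at the same cut score mirrors the kind of comparison the simulation made; the 2013 and 2014 findings themselves come from NCBE's analysis of New York's real data, not from this sketch.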

A graph depicting the findings of the simulation based on the July 2013 test results is shown below.

New York Bar Examination Actual and UBE-Simulated Pass Counts

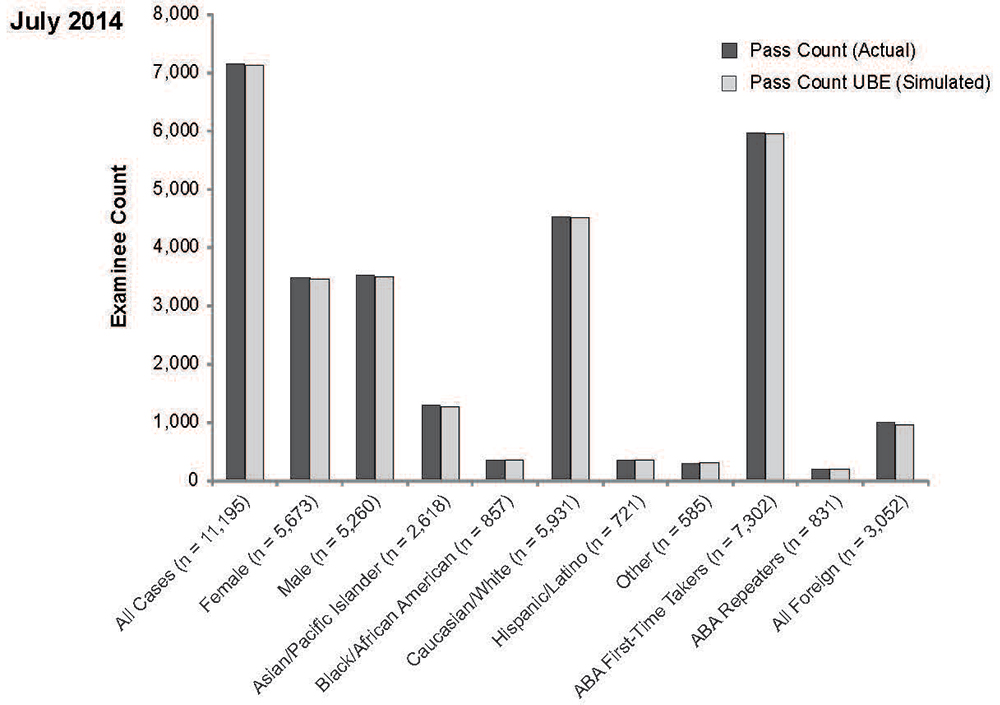

We were interested, as was New York, to see if performing the same simulation for the following July would produce a similar profile, and it did. We again used actual New York data from the July 2014 New York bar examination and configured the data as though the UBE were being administered. The graph depicting the findings of the simulation based on the July 2014 test results is also shown above.

It is noteworthy that the effect on minority examinees was negligible. Further, we saw that those repeating the examination actually fared slightly better under the UBE model in both 2013 and 2014.

As to an underlying or accompanying concern that minority examinees fare worse on the MBE than on other parts of the UBE (or, for that matter, on any bar examination), that issue has been laid to rest on more than one occasion and has been reported in the pages of this magazine. Minority performance on the MBE is not materially better or worse than it is on other portions of the bar examination. While this speaks to matters of deep concern about educational opportunities in America, it does not give credence to charges that the MBE stands out as a barrier to entrance to the profession.

To the extent that jurisdictions express concern about the success of minority examinees on the UBE, note that an examinee who fails a UBE jurisdiction's bar examination but earns a score that meets the passing requirement in another UBE jurisdiction is eligible to transfer that score to any UBE jurisdiction in which it is a passing score. This is a tremendous benefit, and I find that many who are encountering the UBE for the first time do not fully appreciate it as a way to move law graduates into the legal profession. Some employment opportunities, such as federal positions, require only a single bar admission in any jurisdiction, so no relocation is needed.

In the December 2015 issue of this magazine (President's Page), I presented information about the variation in state-by-state MBE means, and that column garnered many positive comments. Evidently many people did not (or do not) understand that pass/fail decisions are made by jurisdictions using their own state MBE means and standard deviations rather than the national mean and standard deviation that we customarily report.

Graphs of recent national MBE means from February and July test administrations are shown below.

MBE National Means, 2007–2016

As a matter of research interest, the historical patterns of MBE means from 10 illustrative jurisdictions with substantial numbers of examinees were graphed against the national means; these graphs are shown below.

MBE Means of 10 Jurisdictions, with National Means Shown, 2007–2016

From experience, I know that each jurisdiction's performance as measured by the MBE mean tells its own story. There are many variables, too many to list here. These graphs underscore that many factors merit close analysis whenever a jurisdiction's bar examination results are interpreted. Generalizations about bar performance are just that.

In closing, I would like to mention that I am approaching the end of my trail ride with NCBE. I will retire by the end of 2017. A search committee headed by Judge Thomas J. Bice of Iowa, the current NCBE chair, is at work setting up the process by which my successor will be chosen. Members of the search committee include Justice Rebecca White Berch of Arizona, David R. Boyd of Alabama, Gordon J. MacDonald of New Hampshire, Judge Phyllis D. Thompson of Washington, D.C., and Timothy Y. Wong of Minnesota. I am certain that the committee will welcome expressions of interest and nominations once the process is announced.

There is time enough for me to thank those who have made my years with the Conference such good ones, so I will defer those sentiments to a future column.

Melissa Cherney, NCBE’s Multistate Professional Responsibility Examination Program Director, is going to beat me out the door. Melissa is retiring after nine years of exemplary service, during which NCBE brought all MPRE test development activities in-house and transitioned test administration from one national vendor to another. As with any great scout, she leaves this particular campsite in much better shape than she found it. We thank her for that and wish her the happiest of retirements.
